replicator kafka This guide describes how to start two Apache Kafka® clusters and then a . Rolex Submariner Date Two-Tone Ceramic Blue Dial 116613LB 2020. for $16,850 for sale from a Trusted Seller on Chrono24. Want to sell a similar watch?

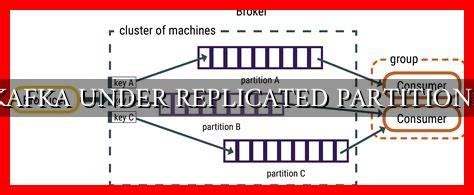

0 · kafka under replicated partitions

1 · kafka replication factor

2 · kafka partition vs replication

3 · kafka data replication

4 · kafka cross region replication

5 · kafka cluster copy and paste

6 · kafka broker failover

7 · copy kafka to another cluster

Discover all the watches of the Speedmaster Moonwatch Professional .

Confluent Replicator allows you to easily and reliably replicate topics from one Kafka cluster to another. In addition to copying the messages, Replicator will create topics as needed .Confluent Replicator is available as a part of Confluent Platform. This tutorial .

This guide describes how to start two Apache Kafka® clusters and then a .

Replicator allows you to easily and reliably replicate topics from one Kafka cluster .Starting in Replicator 5.4.0, dest.kafka.* configurations are no longer mandatory. .Replicator enables hybrid cloud architectures. Replicator provides a way .

One of the more nuanced features of Apache Kafka is its replication protocol. .

This guide describes how to start two Apache Kafka® clusters and then a Replicator process to replicate data between them. Both KRaft and the deprecated ZooKeeper configuration are shown. Note that for tutorial .

Starting in Replicator 5.4.0, dest.kafka.* configurations are no longer mandatory. If these configurations are not supplied, their values are inferred from producer configurations in the .Confluent Replicator allows you to easily and reliably replicate topics from one Apache Kafka® cluster to another. In addition to copying the messages, this connector will create topics as .

Kafka replicator is a tool used to mirror and backup Kafka topics across regions. Features. At-least-once message delivery ( details ). Message ordering guarantee per topic partition. . One of the more nuanced features of Apache Kafka is its replication protocol. Tuning Kafka replication to work automatically, for varying size workloads on a single cluster, is somewhat tricky today. Replication and Partitions are two different things. Replication will copy identical data across the cluster for higher availability/durability. Partitions are Kafka's way to distribute .Confluent Replicator is available as a part of Confluent Platform. This tutorial provides download and install instructions, along with step-by-step guides on how to run Replicator as an .

kafka under replicated partitions

运行Replicator. 这一节描述了在Kafka Connect集群内部如何将Replicator作为不同的connector来运行。为了在Kafka Connect集群里运行Replicator,你首先需要初始化Kafka Connect,在生产环境为了伸缩性和容错将总是使用分布式模式。

kafka replication factor

14. Replicator supports Single Message Transforms (SMTs) (Note: This feature was deprecated as of CP 7.5) Since Replicator is a Kafka Connect connector, it supports SMTs. These transforms allow you to make .Kafka Data Replication. In this module we’ll look at how the data plane handles data replication. Data replication is a critical feature of Kafka that allows it to provide high durability and availability. We enable replication at the topic . Overview of Kafka replication. A Kafka broker stores messages in a topic, which is a logical group of one or more partitions. Partitions are log files on disk with only sequential writes. Kafka guarantees message ordering within a partition. In a nutshell, Kafka is a distributed, real-time, data streaming system that’s backed by a persistent .Replicator is a Kafka Connect Plugin. To run Replicator, you need to take the following steps: Install and Configure the Connect Cluster; Configure and run a Confluent Replicator on the Connect Cluster; This section walks you through both these steps in detail, and reviews the available configuration options for Replicator.

Kafka Replicator is an easy to use tool for copying data between two Apache Kafka clusters with configurable re-partitionning strategy. Data will be read from topics in the origin cluster and written to a topic/topics in the destination cluster according config rules. Features.

Kafka topics are internally divided into a number of partitions. Partitions allow you to parallelize a topic by splitting the data in a particular topic across multiple brokers On the other side replica is the number of copies of each partition you wish to have to achieve fault tolerance incase of a failure Each partition has a preferred leader which handles all the write and read . We use Apache Kafka as a message bus for connecting different parts of the ecosystem. We collect system and application logs as well as event data from the rider and driver apps. Take a look into uReplicator, Uber’s open source solution for replicating Apache Kafka data in a robust and reliable manner.

Kafka Replication High-level Design. The purpose of adding replication in Kafka is for stronger durability and higher availability. We want to guarantee that any successfully published message will not be lost and can be consumed, even when there are server failures.

For a topic replication factor of 3, topic data durability can withstand the loss of 2 brokers. As a general rule, for a replication factor of N, you can permanently lose up to N-1 brokers and still recover your data.. Regarding availability, it is a little bit more complicated.Additional configuration for secure endpoints¶. When the Connect distributed cluster hosting Replicator has a REST endpoint with TLS/SSL authentication enabled (https), you must configure security properties for the TLS/SSL keystore and truststore used by the Replicator monitoring extension to communicate with other Connect nodes in the cluster. In Kafka, the replication factor can be 1 when working on a personal machine. However, in a production environment, where a real Kafka cluster is used, this replication factor should be more than .

In this post, I will walk you through the process of setting up database replication from one source sql database server to multiple destination sql database servers using Apache Kafka and Kafka.Amazon MSK Replicator uses Kafka headers to automatically avoid data being replicated back to the topic it originated from, eliminating the risk of infinite cycles during replication. A header is a key-value pair that can be included with the key, value, and timestamp in each Kafka message. MSK Replicator embeds identifiers for source cluster .

chanel la mousse crème nettoyante anti-pollution à la mousse

Video courses covering Apache Kafka basics, advanced concepts, setup and use cases, and everything in between. Learning pathways (24) New Courses New Designing Event-Driven Microservices. . Replicator has an embedded .

mens givenchy sunglasses

Kafka Replication: Design, Leaders, Followers & Partitions Kafka replicates data to more than one broker to ensure fault tolerance. Learn how Kafka replicates partitions, how leader and follower replicas work, and best practices. Tuning Kafka replication to work automatically, for varying size workloads on a single cluster, is somewhat tricky today. One of the challenges that make this particularly difficult is knowing how to prevent replicas from .

Confluent Kafka Replicator al rescate. En esta ocasión, aprovechando las posibilidades y características de escalado y tolerancia a fallos que nos ofrece la plataforma Confluent, vamos a ver cómo el conector Confluent Kafka Replicator nos permite realizar la replicación de datos entre los dos clusters de una manera muy sencilla, . Amazon Managed Streaming for Apache Kafka (Amazon MSK) provides a fully managed and highly available Apache Kafka service simplifying the way you process streaming data. When using Apache Kafka, a common architectural pattern is to replicate data from one cluster to another. Cross-cluster replication is often used to implement business continuity .

In this module, I'm going to talk about geo-replication for Kafka. Single-Region Cluster Concerns First, why do we need geo-replication? Well, there are two main reasons why you need geo-replication. The first reason is for disaster recovery. So if you only have a single region cluster and if that region fails, then your business stops.This topic provides examples of how to migrate from an existing datacenter that is using Apache Kafka® MirrorMaker to Replicator. In these examples, messages are replicated from a specific point in time, not from the beginning. This blog post walks you through how you can use prefixless replication with Streams Replication Manager (SRM) to aggregate Kafka topics from multiple sources. To be specific, we will be diving deep into a prefixless replication scenario that involves the aggregation of two topics from two separate Kafka clusters into a third cluster. At a high level, it's open source and the main difference is how it handles "true" active-active Kafka clusters, not just one way replication or questionable two-way offset manipulation (due to the fact that Replicator is not open source). Thus the other difference - the support model is wider for MM2 than Confluent specific products .

The replicator requires two additional Kafka topics in destination region: Segment events topic: configured with delete cleanup policy and appropriate retention time to discard old segment events. Checkpoint topic: configured with compact cleanup policy to retain only the last checkpoint for each source partition. Setting replication.factor to 2 means that each message sent to one of these 3 topics will be saved to 2 brokers (e.g. messages in topic 1 are stored at broker 1 and broker 2).. Then if one of the brokers dies the other one can take over and still provide access to these messages. In other words, our cluster can survive a single-broker failure without any data loss .

Unlike Replicator and MirrorMaker 2, Cluster Linking does not require running Connect to move messages from one cluster to another, and it creates identical “mirror topics” with globally consistent offsets. We call this “byte-for-byte” replication. . Compared to other Kafka replication options, Cluster Linking offers these advantages .

Amazon MSK Replicator is a feature of Amazon MSK that enables you to reliably replicate data across Amazon MSK clusters in just a few clicks without requiring expertise to setup open-source tools, writing code, or managing infrastructure. MSK Replicator automatically provisions and scales underlying resources, so you can easily build multi-region applications and only pay for .

kafka partition vs replication

$61K+

replicator kafka|kafka cluster copy and paste